Inside the AI World Model War: Genie 3, World Labs, and MoonLake

An in-depth analysis of Genie 3 vs World Labs vs MoonLake, comparing diffusion video models and 3D world models for AI-powered game development.

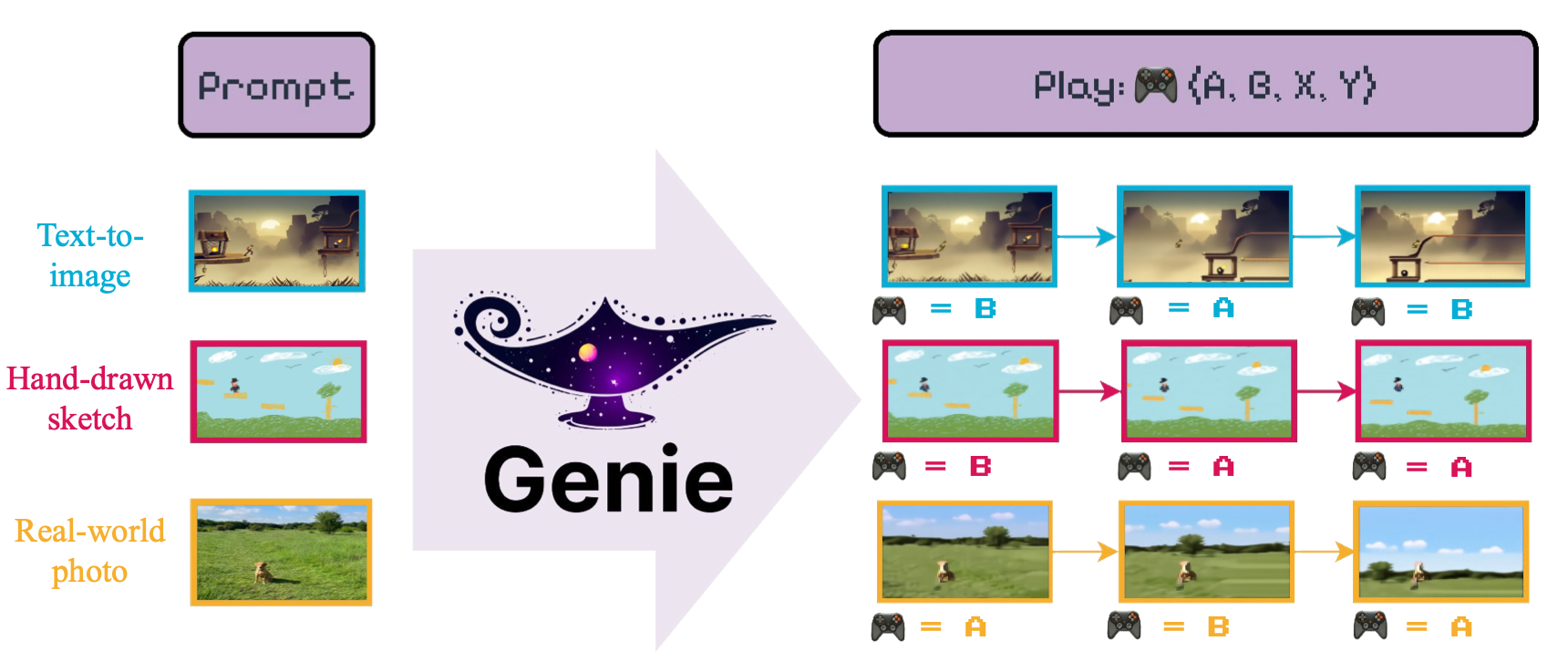

The world model generation space is heating up, and the launch of Genie 3 has intensified an already fierce competition. In this analysis, we break down how Google DeepMind's Genie 3 compares to two other major players - World Labs and MoonLake - and what each approach means for game developers and content creators.

Understanding Genie 3's Technical Approach

Google DeepMind has not released detailed technical papers on Genie 3, so this part is an educated guess: the system is most likely built on a diffusion transformer (DiT) architecture. Too technical already? 😅 Simply put, DiT replaces the traditional U-Net backbone inside a diffusion model with a transformer.

This is a great lecture to understand transformers in diffusion models.

At its core, Genie 3 functions as a 2D video generator with learned 3D world understanding. The interaction model is quite simple: each frame responds to WASD keyboard inputs. Press A, and the next frame's perspective rotates left. Press W, and you move forward. You can add more controls like jump, run, or look up. But ultimately, they can all be translated into basic transformations - left, right, forward, backward, or adjustments in azimuth and elevation.
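To make the "everything reduces to basic transformations" point concrete, here is a minimal sketch of how key presses could be translated into camera motion. This is only an illustration: the `CameraPose` class, the `apply_key` function, and the step sizes are hypothetical names and values I made up, and Genie 3 itself has no explicit camera object that we know of. It takes the action as conditioning and predicts the next frame directly.

```python
import math
from dataclasses import dataclass, replace

STEP = 1.0       # hypothetical distance moved per key press
TURN_DEG = 15.0  # hypothetical rotation per key press, in degrees


@dataclass(frozen=True)
class CameraPose:
    x: float = 0.0          # position on the ground plane
    z: float = 0.0
    azimuth: float = 0.0    # yaw in degrees, 0 means facing +z
    elevation: float = 0.0  # pitch in degrees


def apply_key(pose: CameraPose, key: str) -> CameraPose:
    """Return the pose after one key press; unknown keys leave it unchanged."""
    yaw = math.radians(pose.azimuth)
    forward = (math.sin(yaw), math.cos(yaw))
    if key in ("W", "S"):  # move forward or backward along the view direction
        sign = 1.0 if key == "W" else -1.0
        return replace(pose,
                       x=pose.x + sign * STEP * forward[0],
                       z=pose.z + sign * STEP * forward[1])
    if key in ("A", "D"):  # rotate left or right (change azimuth)
        sign = -1.0 if key == "A" else 1.0
        return replace(pose, azimuth=(pose.azimuth + sign * TURN_DEG) % 360.0)
    if key in ("UP", "DOWN"):  # look up or down (change elevation, clamped)
        sign = 1.0 if key == "UP" else -1.0
        new_elevation = max(-89.0, min(89.0, pose.elevation + sign * TURN_DEG))
        return replace(pose, elevation=new_elevation)
    return pose


pose = CameraPose()
for key in ["W", "A", "W"]:  # step forward, turn left, step forward again
    pose = apply_key(pose, key)
print(pose)
```

Extra controls such as run or jump would only add more entries to this mapping: a larger step, or a temporary vertical offset.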

This input-conditioned video generation approach means Genie 3 can produce dynamic scenes from the start - flowing water, erupting volcanoes, birds in flight. The physics aren't perfect yet; you will notice inconsistencies here and there. But the results are already remarkably close to what we see in modern 3D games.

How can Genie 3 visualize a 3D-like world so convincingly? My guess is that it was trained on millions - perhaps billions - of YouTube videos 📺 📺 📺. While YouTube videos are technically 2D (MP4), they are recordings of a 3D world. Camera motion, perspective shifts, occlusion, lighting changes - all of these contain implicit 3D signals.

So even without explicit 3D supervision, large-scale video training allows the model to internalize statistical patterns of how a 3D world behaves. In that sense, YouTube itself becomes a massive, indirect source of 3D understanding. That is why a model trained on such data can appear to “understand” depth, motion, and spatial consistency - even though it operates purely in 2D pixel space.
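One of those implicit 3D signals, motion parallax, is easy to work out by hand. Under a simple pinhole camera model, when the camera slides sideways, a static point shifts in the image by the focal length times the camera movement divided by the point's depth. The sketch below uses made-up numbers (the focal length and distances are illustrative assumptions, not measurements) to show that nearby objects move much more on screen than distant ones. That is exactly the kind of regularity a video model can pick up without ever seeing a depth map.

```python
def image_shift_px(focal_px: float, camera_shift_m: float, depth_m: float) -> float:
    """Horizontal image shift of a static point when a pinhole camera slides sideways.

    Projection is u = f * X / Z, so moving the camera by t changes u by f * t / Z.
    """
    return focal_px * camera_shift_m / depth_m


FOCAL_PX = 1000.0   # illustrative focal length, in pixels
CAMERA_SHIFT = 0.1  # camera slides 10 cm to the side

near_shift = image_shift_px(FOCAL_PX, CAMERA_SHIFT, depth_m=2.0)   # a chair 2 m away
far_shift = image_shift_px(FOCAL_PX, CAMERA_SHIFT, depth_m=50.0)   # a building 50 m away

# The near object shifts 25 times more than the far one for the same camera move.
print(f"near: {near_shift:.1f} px, far: {far_shift:.1f} px")
```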

So the real question becomes: is a pure 2D, real-time video representation an effective foundation for building a game? Personally, I don’t think so 🧐. At least not today. Generating real-time video through large diffusion models is extremely expensive at inference. You are effectively re-rendering the entire scene, frame by frame, through a massive neural network. In contrast, traditional 3D rendering pipelines are highly optimized. Once geometry, lighting, and materials are defined, rendering additional frames is relatively cheap and deterministic. (That is why Claythis offers image-to-3D and text-to-3D, along with automatic rigging and retargeting, for game-ready 3D characters.)

Relative Inference Cost (Conceptual)

Text generation ▓

Image generation ▓▓▓▓▓▓▓▓▓▓▓▓▓▓

Video generation ▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓
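Here is a back-of-the-envelope way to see why the video bar is so long: every displayed frame is a full pass through the model, so cost grows linearly with frame rate, session length, and player count. The function names and every number in this sketch are placeholders I picked for illustration. They are not measured or published figures for Genie 3 or any other model.

```python
def frames_in_session(fps: int, minutes: float) -> int:
    """Number of frames (and therefore model passes) in one play session."""
    return round(fps * minutes * 60)


def generated_session_cost_usd(fps: int, minutes: float, usd_per_frame: float) -> float:
    """Inference cost of one player's session when every frame is generated."""
    return frames_in_session(fps, minutes) * usd_per_frame


# Placeholder inputs: 30 fps, a 10-minute session, $0.001 of GPU time per frame.
frames = frames_in_session(fps=30, minutes=10)
cost = generated_session_cost_usd(fps=30, minutes=10, usd_per_frame=0.001)

print(f"{frames} model passes, about ${cost:.2f} for one player")
```

Whatever the real per-frame figure turns out to be, the shape of the formula is the point: a traditional engine pays most of its cost once, at asset creation time, while a generative video model pays on every frame for every player.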

From an energy and cost-efficiency standpoint, replacing a structured 3D engine with a full generative video model feels wasteful. However - let me partially take that back. If GPU costs drop dramatically, network bandwidth becomes near-instant and inexpensive, or a much more efficient architecture comes out, the equation could change. In that world, a Genie 4 or Genie 5-style approach might start to make practical sense. Instead of painstakingly designing 3D assets object by object - a process that consumes enormous human labor - you could simply stream the world as it is generated. From a development perspective, that could be radically productive. No manual modeling. No manual rigging. No asset pipelines. Just generation and streaming.

World Labs: The Gaussian Splatting Native

World Labs takes a fundamentally different approach, building its world model natively on 3D Gaussian Splatting (GS). If you've worked with Gaussian Splatting before, you will immediately recognize the characteristic GS artifacts in their Marble demo. It is free and incredibly easy to try directly in your browser. I highly recommend giving it a try - you will immediately notice the difference between native 3D GS and 2D video generation. It is one of those things that is much clearer when you see it for yourself.

Gaussian Splatting itself does not include colliders, so in their demo you can actually pass through walls. Yes, World Labs can generate meshes as well - but because those meshes are converted from Gaussian Splatting representations, the topology quality often degrades significantly. More importantly, their demo worlds are static. This may be the most critical difference between Genie 3 and World Labs: Genie’s video-native approach handles dynamic scenes naturally (remember the water splash?), whereas introducing motion into a GS-based generator presents substantial technical challenges.

Oh yeah I can pass through the wall! 😆😆😆

The proven method for dynamic GS scenes requires converting splats into explicit meshes first, then separating objects, adding colliders, and applying motion. Oh wait, isn't that the traditional game development pipeline that AI was supposed to simplify?
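To see why you can walk through walls, it helps to look at what a splat actually stores. The sketch below is a simplified picture: a real 3D Gaussian Splatting scene typically stores spherical-harmonic color coefficients rather than a single RGB value, and the `Splat`, `BoxCollider`, and `move` names are hypothetical, not World Labs' or any engine's API. The point is that a splat is purely an appearance primitive. Nothing in it tells a physics system where the player must stop, so collision geometry has to be added as a separate layer.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Splat:
    """One 3D Gaussian: describes how a blob looks, with no notion of solidity."""
    mean: Vec3                                   # center position
    scale: Vec3                                  # extent along each local axis
    rotation: Tuple[float, float, float, float]  # orientation as a quaternion
    opacity: float
    color: Vec3                                  # simplified; real scenes use SH coefficients


@dataclass
class BoxCollider:
    """Axis-aligned box added by hand (or by a mesh conversion step) to block movement."""
    min_corner: Vec3
    max_corner: Vec3

    def contains(self, point: Vec3) -> bool:
        return all(lo <= p <= hi
                   for p, lo, hi in zip(point, self.min_corner, self.max_corner))


def move(position: Vec3, delta: Vec3, colliders: List[BoxCollider]) -> Vec3:
    """Move the player unless the target lies inside a collider."""
    target = tuple(p + d for p, d in zip(position, delta))
    if any(c.contains(target) for c in colliders):
        return position  # blocked
    return target


wall = BoxCollider(min_corner=(1.0, 0.0, -5.0), max_corner=(2.0, 3.0, 5.0))
player = (0.0, 1.0, 0.0)
step_into_wall = (1.5, 0.0, 0.0)

print(move(player, step_into_wall, colliders=[]))      # splats only: walks into the wall
print(move(player, step_into_wall, colliders=[wall]))  # with a collider: stays put
```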

That said, Gaussian Splatting remains a widely adopted representation method in academia. World Labs itself is one of the best-funded teams in the Bay Area, having raised $230 million and currently pursuing a $5 billion valuation. We should not underestimate how quickly they can move. Innovation cycles in generative AI have been wildly unpredictable - no one anticipated this level of acceleration, especially in vibe-coding, just three years ago. Things that seem technically constrained today could look very different in a short amount of time.

On top of that, World Labs' Marble has already been showcased with several game studios. I would like to mention my fellow game entrepreneur, Jani (founder of BitMagic), who shared a demo of his team working with Gaussian Splatting from World Labs. The demo looks genuinely impressive and practical. At the end of the day, you need a scene to build a game - and being able to generate an entire environment from just a few text prompts can save enormous time and effort in early production.

However, their game studio partnerships feel more like showcase byproducts than their core focus. I believe their real pitch likely aligns more closely with Yann LeCun's vision of world model building - understanding and simulating reality rather than shipping game-ready environments. You know… to build a true humanoid, we need physical AI - and to build physical AI, we need a robust world model.

MoonLake: The New Approach

Full disclosure: Claythis shares a building with MoonLake (eh... so what?😅)

MoonLake has not publicly launched their product yet, but based on their X/Twitter presence and recent hackathon results, they appear to be working on three products:

All generated directly through our prompt-based chatbox:

– 3D assets

– Characters

– Animations

– Game mechanics

– Scene generation

– Skyboxes

Our web app is now rolling out, come try it for yourself. pic.twitter.com/SnUe77vZM1

Moonlake (@moonlake), February 11, 2026

Their recent demo for the engine. It looks like native 3D.

- Web-based 3D game engine powered by an LLM: This overlaps with what Bezi is doing (they actually pivoted hard from "easier 3D design on web" to "LLM for Unity"). I am not sure whether MoonLake is trying to build a game engine from scratch, but I personally think Bezi's approach - working around Unity - makes practical sense. However, there is an argument that building a new, lighter engine already optimized for LLM workflows might ultimately be easier and faster. I believe they will launch the engine pretty soon. Only time will tell.

- Reverie: Real-time video style transfer for interactive scenes: This is another interesting direction: a hybrid approach to playable video generation. I haven’t tested it myself yet, so this is partly speculative, but it suggests a different workflow: build your game using minimal placeholder blocks first (much like any Unity tutorial), then swap those bare-bones scenes with high-quality rendered visuals at runtime. In theory, this could dramatically accelerate game production timelines. The catch? Inference costs💰💰💰. The same fundamental challenge Genie faces today. But three years from now, the economics could look very different.

- 2D sprite game generator: There is very limited public information available, so I don’t have much to comment on. But broadly speaking, with the right vibe-coding setup, developers can already build web-based games today. That's why I don’t believe that is their primary focus.

Reverie: from MoonLake website
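Since I have not tested Reverie, the following is only my own sketch of what a "placeholder blocks first, restyle at runtime" loop could look like. It is not MoonLake's interface. Every name here (`render_greybox`, `fake_stylize`, `run_session`) is hypothetical, and the "model" is a trivial stand-in that just brightens pixels. What it does show is the division of labor: game logic and the cheap greybox render stay ordinary, deterministic code, while the style model is called once per displayed frame, which is where the inference bill comes from.

```python
from typing import Callable, List

Frame = List[List[int]]  # tiny stand-in for an image: a grid of pixel values


def render_greybox(player_x: int, width: int = 8, height: int = 4) -> Frame:
    """Cheap placeholder render: 1 marks the player block, 0 is empty space."""
    frame = [[0] * width for _ in range(height)]
    frame[height - 1][player_x % width] = 1
    return frame


def fake_stylize(frame: Frame) -> Frame:
    """Stand-in for a style-transfer model; a real one would be a heavy network call."""
    return [[value * 255 for value in row] for row in frame]


def run_session(num_frames: int, stylize: Callable[[Frame], Frame]) -> List[Frame]:
    """Advance the game and restyle every frame before it is shown."""
    shown = []
    player_x = 0
    for _ in range(num_frames):
        player_x += 1                       # ordinary, deterministic game logic
        greybox = render_greybox(player_x)  # bare-bones scene
        shown.append(stylize(greybox))      # one model call per displayed frame
    return shown


frames_shown = run_session(3, fake_stylize)
print(len(frames_shown), "frames, each one passed through the style model")
```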

The same conclusion: Not yet but...

The competition in world model generation is producing rapid innovation, but we are still in the early innings. None of these solutions are ready to replace traditional 3D game development pipelines today - but the gap is closing faster than many expected.

We will almost certainly see many more GenAI-powered tools for game developers. In the near term, these tools will likely remain complementary to existing pipelines. But at some point, we may see a more fundamental shift. When will it happen? I think it might be very soon. Actually, I have a thesis on that. It is about AI-native game genres - and I suspect that is what will ultimately trigger the real transformation. Stay tuned!

This analysis is part of Claythis' ongoing coverage of generative AI developments in 3D content creation. Follow us for more insights on the evolving landscape of AI-powered game development tools.